One of my biggest gripes about AI marketing is the talk about “zero variability” performance. It’s pure marketing speak that confuses concepts and seeks to mislead end users and software purchasers. A surprising number of companies are guilty of this, and I wonder if it’s because each company is copying one another’s marketing kit. (Or at the very least, a similarly non-technical person is making the marketing materials.)

In the healthcare space, the “zero variability” phrase seeks to highlight that there is clinical variation (and hopefully the AI solution has less variation). But the phrase confuses accuracy with precision. A broken clock is infinitely precise - it’ll always show the same time regardless of what time it actually is - but is not very accurate. As a physician, I hope to be both accurate and precise, but would likely prefer accuracy over precision. (Better to be right than stubborn.)

As a thought experiment, a company could build software that doesn’t even look at the images and always returns the result “Normal”. If it always provides the same answer, the variability is zero, but this says nothing about whether the software is accurate or reliable. This is the wrong benchmark, and I’d rather AI software look at the inputs and focus on accuracy. The “zero variability” phrase is trying to say the AI software is deterministic (the same input always results in the same output), but even that isn’t always true.
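The thought experiment above can be sketched in a few lines of Python. This is only an illustration: the `always_normal` function and the ground-truth labels are made up, and they stand in for no particular product.

```python
def always_normal(_image):
    """Hypothetical 'model' that ignores its input entirely."""
    return "Normal"

# Made-up ground-truth labels, for illustration only.
truth = ["Normal", "Abnormal", "Normal", "Abnormal", "Abnormal"]

# Run the "model" three times on every study.
runs = [[always_normal(None) for _ in truth] for _ in range(3)]

# Variability: on how many studies do repeated runs disagree?
disagreements = sum(
    len({run[i] for run in runs}) > 1 for i in range(len(truth))
)

# Accuracy: how often does the output match the truth?
accuracy = sum(p == t for p, t in zip(runs[0], truth)) / len(truth)

# Sensitivity: fraction of truly abnormal studies flagged as abnormal.
preds_on_abnormal = [p for p, t in zip(runs[0], truth) if t == "Abnormal"]
sensitivity = sum(p == "Abnormal" for p in preds_on_abnormal) / len(preds_on_abnormal)

print(disagreements, accuracy, sensitivity)
```

The repeated runs never disagree, yet on these toy labels the "model" misses every abnormal study. Zero variability and clinical usefulness are separate questions.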

Take the example of echo measurement automation - there are actually many (somewhat subjective) steps involved in the derivation of every single echo measurement. From an imaging study of ~100 videos, you have to do view selection and choose a particular video to perform the downstream analysis. Even after choosing the video, any given video often has 3 to 4 distinct heart beats to choose from for further downstream analysis. Even after choosing the heart beat, the selection of the video frame can be quite subjective. In each of these steps, there is not necessarily a clear right or wrong - multiple choices could be equally appropriate. An echo video is acquired at approximately 50 Hz, so a difference of 0.02s corresponds to a single frame: adjacent frames of the same video can look imperceptibly different and be equally appropriate to annotate (however, as we’ve previously shown, the choice leads to significant variation in downstream measurements).

It seems unreasonable to have zero variability, but there’s limited evidence or data on how the frames and videos are chosen. In echocardiography, we benefit from a lot of data and there are many similar or identical videos/heart beats/frames that one can choose from. I can guarantee that these “small” choices (of which there really might not be an absolute right or wrong) lead to different measurements and non-zero variability. Ultimately, it’s a sleight of hand: one pushes the variability upstream and only highlights a small part of a pipeline of cascading dependencies. Even if a particular measurement has low variance, the software design for view selection and frame selection definitely does have high variance - and, if anything, there is much less clarity about exactly what it’s doing or how it was evaluated.
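To make the "variability pushed upstream" point concrete, here is a toy sketch. The measurement step is perfectly deterministic for a given frame, but the result still depends on which of several defensible frames was picked. The curve, the dimension values, and the candidate frame range are all invented for illustration; only the roughly 50 Hz frame rate comes from the discussion above.

```python
import math

FRAME_RATE_HZ = 50                     # roughly 50 frames per second
FRAME_INTERVAL_S = 1 / FRAME_RATE_HZ   # 0.02 s between adjacent frames

def toy_dimension_mm(t, beat_s=1.0):
    """Made-up smooth curve standing in for a cardiac dimension over one beat."""
    return 40.0 + 8.0 * math.cos(2 * math.pi * t / beat_s)

def measure(frame_index):
    """Deterministic 'measurement': same frame in, same number out."""
    return toy_dimension_mm(frame_index * FRAME_INTERVAL_S)

# Assume frames 3..7 are all reasonable picks for the same cardiac phase.
candidate_frames = range(3, 8)
values = [measure(i) for i in candidate_frames]

# Spread of the final measurement across equally defensible frame choices.
spread_mm = max(values) - min(values)
print(f"spread across candidate frames: {spread_mm:.2f} mm")
```

Calling `measure` twice on the same frame always agrees, which is the part a "zero variability" claim describes. The spread across candidate frames is the part it leaves out.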

How to evaluate AI models in medicine

So with that critique in mind, the question remains - how should a clinician evaluate what is a good AI model? When is software good enough to use in clinical practice? Current FDA approval of AI technologies requires only limited validation, with the vast majority of algorithms cleared on few-site, retrospective, and non-blinded data. In addition, most technologies in echo AI are semi-automated, meaning the onus and responsibility still lie with the end user and the AI does not take on the liabilities of inaccurate assessments. As such, potential end users of echo AI must critically evaluate the performance, specifications, and design of these algorithms for suitability in their particular echo labs. We propose a few key questions that should be answered in understanding the performance of these algorithms.

Understand the training data

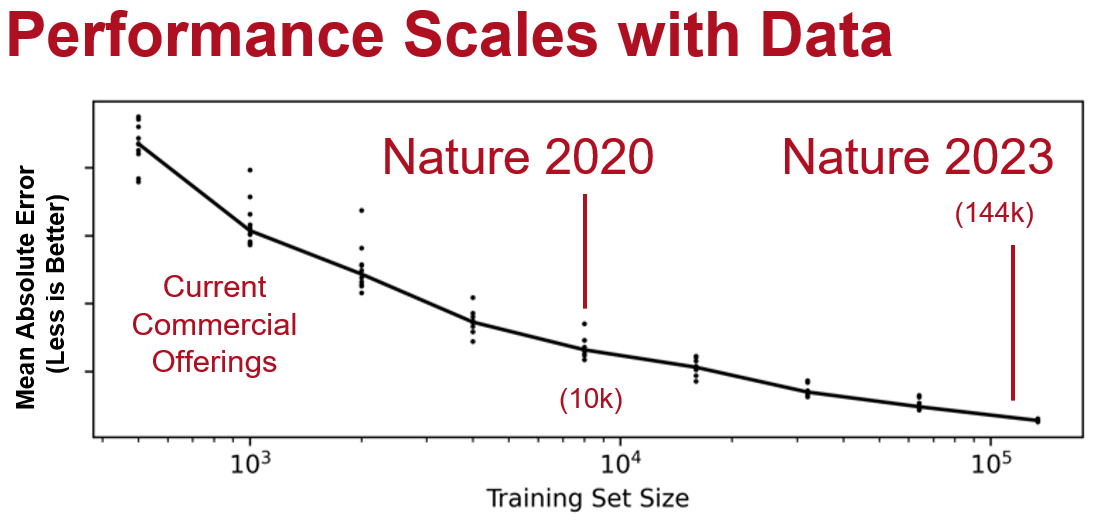

In AI, the quality and quantity of training data are crucial for model performance (although quantity is by far the more important of the two). In almost all cases, AI model accuracy has been shown to scale with the number of training examples. Personally, I’ve noticed step functions in the qualitative improvement of AI models when able to train at scale. This is even more important than the particular details of a model architecture (which vendors oftentimes won’t tell you, since they are embarrassed to say it’s just an off-the-shelf architecture rather than something deeply proprietary). My experience has been that, when trying to design clever architecture improvements, the problem is better solved when we just get 10x more data and re-train the original model. This is also the reason why most self-supervision or semi-supervision method papers benchmark in the low data regime (the returns diminish considerably with a reasonably large dataset, and a fundamental assumption of this area of research is that you can’t easily go out and get more data).

Similarly, there is increased understanding that undertrained models perform less well in poorly represented populations, particularly underserved populations for which models might not have enough training examples, as well as with different image settings (kernel size, frame rate, acquisition habits, etc.). It is the responsibility of any AI algorithm vendor to disclose the type and amount of training data used to produce the model. As an end user, consider whether their training data is similar to your site’s or if the data is “out-of-domain” and not reflective of your institution’s own experience. In clinical medicine, each institution develops site-specific workflows and protocols (for example, Mayo Clinic flips the A4C echo view on acquisition), and while it could be easy for a human clinician to recognize that change, many AI systems are not built with these human-trivial variations in mind.
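One simple way to look for the out-of-domain problem described above is to break results down by site or subgroup instead of reporting a single pooled number. The sketch below is a minimal illustration; the `accuracy_by_group` helper, the group names, and the records are all hypothetical.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """records: iterable of (group, prediction, truth) tuples."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, pred, truth in records:
        totals[group] += 1
        hits[group] += int(pred == truth)
    return {group: hits[group] / totals[group] for group in totals}

# Made-up results: site_A resembles the training data, site_B does not.
records = [
    ("site_A", "Normal", "Normal"),
    ("site_A", "Abnormal", "Abnormal"),
    ("site_A", "Normal", "Normal"),
    ("site_A", "Abnormal", "Abnormal"),
    ("site_B", "Normal", "Abnormal"),
    ("site_B", "Normal", "Normal"),
]

overall = sum(pred == truth for _, pred, truth in records) / len(records)
per_group = accuracy_by_group(records)
print(overall, per_group)
```

In this toy data the pooled accuracy looks respectable while the smaller, poorly represented site performs much worse, which is exactly the pattern a single headline metric hides.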

Test with your own data

Impressive demos are almost always cherry picked, and are often shown on high quality images for which analysis is easy. The demos often aren’t done in real time, but rather with selected studies that are known to produce the “right results”. When evaluating an AI product, ask to see its performance on your site’s data without manual adjustment or curation. It is of paramount importance that the way validation is done is well documented and disclosed for AI models (as there is always a small risk that the training set and test set overlap, resulting in artificially high performance metrics). A key tenet of good AI model training practices is external validation, yet only recently has the FDA required external validation in at least three sites. Additionally, similar to clinical trials, there are good standard practices that improve the rigor of the validation. For example, blinding and randomization are foundational tenets of clinical trial design, as they minimize confounding and the risk of bias among participants. Ask if the validation was done in a blinded setting (How? Without prompting or anchoring? Was there a comparison arm, or even if “blinded”, did everyone know it was always AI?)
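The train/test cross-over risk mentioned above is easy to check when patient identifiers are available for both splits. Here is a minimal sketch; the `patient_overlap` helper and the IDs are hypothetical, and in practice the check should be done at the patient level rather than the video or frame level, since one patient contributes many videos.

```python
def patient_overlap(train_ids, test_ids):
    """Return the patient IDs that appear in both splits."""
    return set(train_ids) & set(test_ids)

# Made-up identifiers, for illustration only.
train_patients = ["p001", "p002", "p003", "p004"]
test_patients = ["p004", "p005", "p006"]

leaked = patient_overlap(train_patients, test_patients)

# Drop leaked patients from the test set before computing any metric.
clean_test = [pid for pid in test_patients if pid not in leaked]
print(sorted(leaked), clean_test)
```

Any non-empty overlap means the reported test performance is at least partly measured on patients the model has already seen.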

Do you really want an AI that only works on pristine images without pathology? When testing with your own data, actually include pathologies that are common in clinical practice. For example, include videos that have pericardial effusions and check that the AI doesn’t confuse the pericardial effusion for the left ventricle blood pool. Find videos of lower quality (but representative of what can happen in an echo lab), including ones where the sonographer moves his or her hand during the acquisition. Does the AI handle failure modes gracefully? For echocardiography in particular, we’ve noticed a model is high quality when it works well across all frames of a video (there are only small changes frame to frame, so if the measurement jitters and jumps around, that intuitively is an uncertain model and cannot be providing a high precision measurement). Many companies will only show measurements on one or two frames of a video, but that’s a much lower bar, and significant frame-to-frame variation betrays a low quality model that will not stand up to close scrutiny.
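The frame-to-frame check described above can be turned into a crude number. The sketch below uses mean absolute change between consecutive frames as a jitter score; that metric is just one reasonable choice, and the per-frame values are invented for illustration.

```python
from statistics import mean

def frame_to_frame_jitter(per_frame_values):
    """Mean absolute change in a measurement between consecutive frames."""
    diffs = [
        abs(later - earlier)
        for earlier, later in zip(per_frame_values, per_frame_values[1:])
    ]
    return mean(diffs)

# Made-up per-frame measurements (mm) from two hypothetical models
# run on the same five consecutive frames.
smooth_model = [40.0, 40.5, 41.0, 41.5, 42.0]
jumpy_model = [40.0, 46.0, 39.0, 47.0, 41.0]

print(frame_to_frame_jitter(smooth_model))  # 0.5
print(frame_to_frame_jitter(jumpy_model))   # 6.75
```

Both models could look fine if a vendor showed only the first frame. Running the measurement across every frame of a video from your own lab is what exposes the difference.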

In Summary

Poorly designed AI models are surprisingly brittle and complicated in the most unintuitive ways, and a busy clinical environment is not the place for an engineer to encounter a random scenario or unorthodox approach for the first time. The excitement in AI is for its ability to handle the full gamut of clinical imaging - not just the easy studies. It’s actually an open secret in the industry that models that work well for high quality images don’t always work as intended for low quality images (even though to a clinician they would both be clinically adequate for interpretation). It’s already a breeze (and joy) to read the crisp images of a well-acquired study, but the clinical judgement occurs in the trenches with the challenging views and confusing scenarios. How software handles edge cases, unexpected (read: biologically implausible) results, and adversarial examples will really distinguish between good and bad AI models.

When trained with data on only hundreds of patients, an AI is unlikely to have seen a large pericardial effusion, mass effect, or hundreds of different scenarios that are not uncommon but can be unanticipated in general AI model design. You don’t want to be the first user of version 1.0 software, and that goes especially for AI software: there are undoubtedly going to be growing pains with respect to accuracy and value proposition for AI in healthcare. Given the need for rapid iteration, it’s important for companies to work closely with end users to improve algorithms (rather than the current approach of wining and dining KOLs and hoping to buy good reviews).