InVision Precision LVEF AI echo algorithm is now FDA cleared

Only AI-LVEF algorithm shown in blinded RCT to save time and improve precision

On November 27, 2019, I posted the preprint for a video-based LVEF deep learning model. While the work had already been underway for about a year (I posted a teaser video in September), these were the first two public artifacts of a project that culminated in FDA 510(k) clearance last month. Press release here.

In the intervening 4 years, we’ve refined our approach, improved the algorithm, added training data, and rigorously tested our model. We’re proud to say we’re the only LVEF algorithm that has been tested in a blinded, randomized clinical trial. Evaluated using the exact same clinical workflow and software that clinicians use day-to-day, InVision Precision LVEF was shown to save 2 minutes of sonographer time and 10 seconds of cardiologist time per study, and to produce a more accurate and reproducible measurement of heart function.

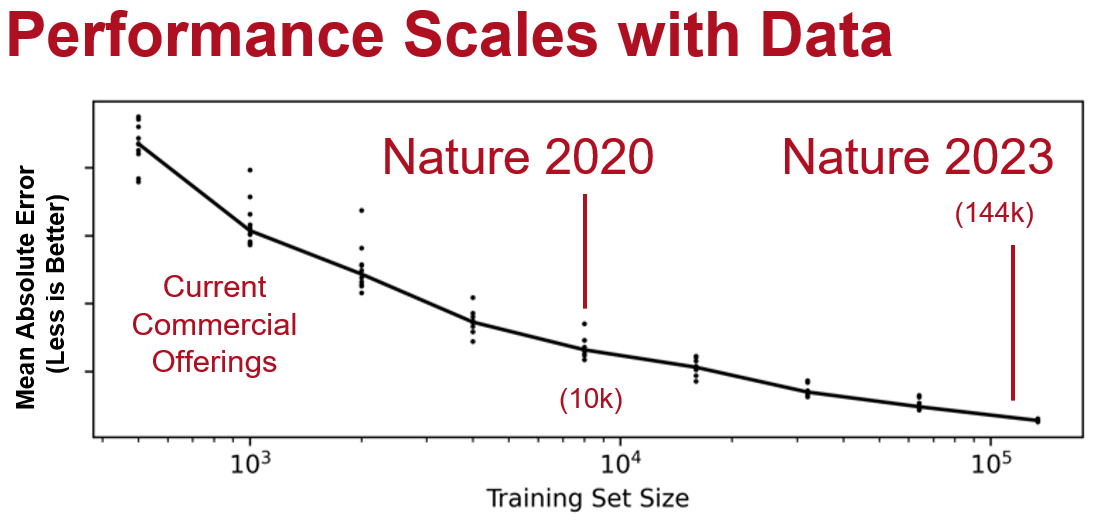

Not all AI models are the same (in design or in validation), in the same way one can’t say a medical intern and an echocardiographer are the same, even though both are human assessors of LVEF. Many existing commercial LVEF models are trained on 600-1000 training examples, while our model was trained on more than 144,000 training examples. This is the equivalent of being in an echo lab for a week vs. being in an echo lab every day for 3-5 years. In all of AI, there’s a strong relationship between the amount of training data and the performance of the model - and we think this is just as true for AI models of LVEF.

Finally, because the team comes from academic roots, we have thought hard about how best to design and evaluate medical AI models. We are one of the few teams that have publicly released code and training data to really show the internal workings and design of the model. Frankly put, we think we have the best AI-LVEF model currently in existence, and we’d love to see it put to use to improve patient care.

As a cardiologist, how often do you see significant variation in LVEF between what is reported in an outside hospital (OSH) report vs. what you see on the repeat study obtained locally? In patients with serial echocardiograms, particularly at critical junctures like before starting chemotherapy, can we get more accurate assessments of change over time? With InVision Precision LVEF, we hope that many of these diagnostic dilemmas can be solved.

Value Capture Vs. Value Creation

Now that our LVEF device is FDA cleared, we are excited for deployment into clinical practice. We recognize there are already many great companies out there tackling a variety of echo automation tasks, but we think LVEF is particularly critical in clinical decision-making. We also believe in facilitating accurate assessment of LVEF across cardiac ultrasound in all practice patterns, and hope to produce a solution that allows better comparison between hospitals, physicians, and different time points.

Thus, we are offering InVision Precision LVEF for free.

Don’t get me wrong - I think there is tremendous value in our algorithm. I really believe it can improve patient care, and more than other algorithms, I think we have the data to back that up. But at the same time, we don’t think this is the place to do value capture - rather we want to focus on value generation. We think there are real use cases where assistance in evaluation of LVEF can aid in the management of a difficult clinical situation, and bring additional thought to a complex case.

We also want clinicians to become more comfortable with the idea of using AI in the echocardiography workflow, and to find situations where it brings them value. We don’t think all algorithms are the same, and want end users to have the opportunity for side-by-side comparison and critical appraisal of different algorithms for the same tasks [1]. As co-chair of the ASE ImageGuideRegistry, one of the things I’m really pushing for is collecting a set of data to benchmark AI-echo algorithms and publicly display their performance in a neutral, third-party way.

With that in mind, over the coming months we will be working with PACS system vendors and providers for direct integration and deployment into hospital systems. Our main ask in many of these agreements is that the technology be offered for free to the end user (at least for the first 3 years), so that echo labs can evaluate it on their own data and types of studies. If operating costs are too high [2], we might charge a modest fee, but ultimately our goal is to prioritize wider adoption over revenue generation for this model.

Next Steps

If you’ve gotten this far, I assume you care about echo and AI on some level. If that’s the case, check out our online demo, now live. You can upload your own studies to see how the algorithm would work on them, and to see the various ways we would integrate with the PACS system.

If you also have a leadership or procurement role in your echo lab and you’re interested in getting InVision Precision LVEF sooner, consider signing up for our waitlist. We’re collecting some information on the most common PACS systems and set-ups that potential end users have deployed, so we can prioritize our engineering resources. If you have a PACS system that’s on our short-term roadmap, we’ll contact you about how to get started sooner!

[1] In fact, one of my greatest fears is that too much hype in the space will leave early adopters disenchanted with beta products and assuming all AI models are the same.

[2] We are currently considering more than 20k studies/year processed at a single site as a potential threshold.