As part of my AI research, I frequently get asked about using cardiac MRI measurements not just as a comparison, but as “The Ground Truth”. The idea is that measurements are most accurate on cardiac MRI imaging rather than echocardiography or CT or other cardiac imaging modalities, and thus MRI should be the reference standard for assessing the accuracy of cardiac measurements. While I agree we should compare across modalities and I think cardiac MRI is extremely useful, I don’t think of cardiac MRI as the gold standard and think this idea is more dogma than science. Here’s why.

Medical imaging is more limited by human variation than image quality

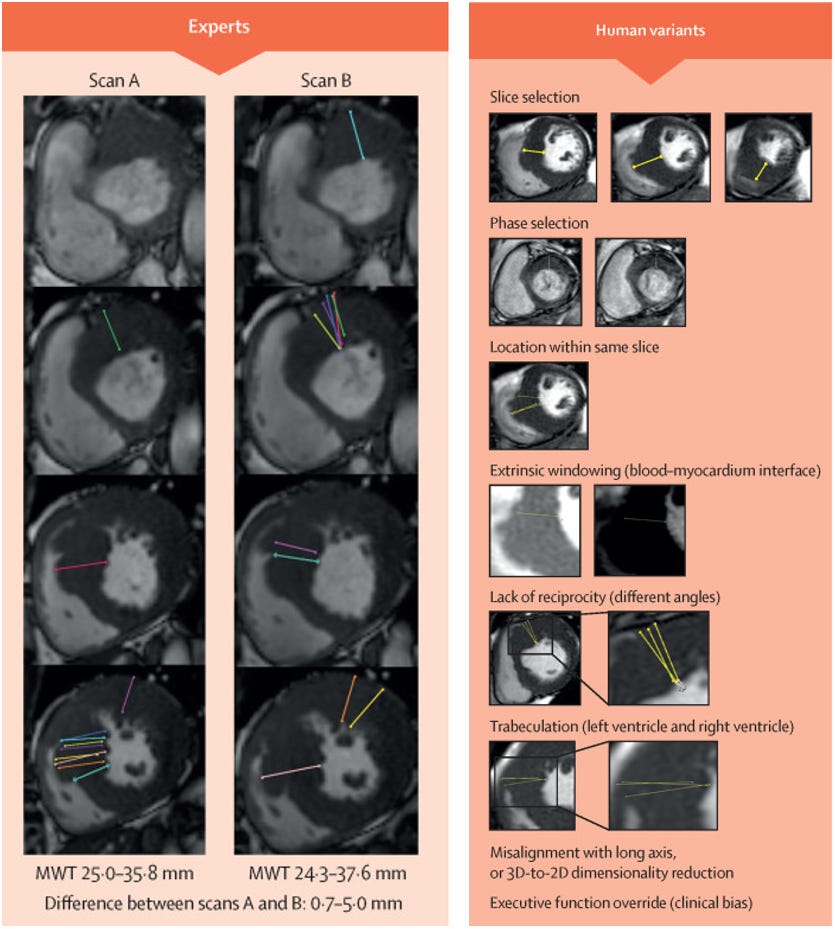

As a cardiac imager, I fully believe in the beauty of cardiac MRI, both in terms of image quality and the elegance of the underlying science of MRI [1]. But precisely because it’s so pretty, it’s easy to slip into shallow thinking, where pretty pictures equate to better measurements. There’s undoubted value in having a high signal-to-noise ratio (SNR), which is both reflected in “how pretty an image looks” and valuable for identifying the boundary between blood and muscle when measuring wall thickness. But a central thesis of medical imaging (and my research program) is that, in the current state, variation in medical impressions is driven much more by human variation than by image quality. After all, this is why it’s important to have an expert imager review the studies and have a physician think critically about the scenario rather than going through the images by rote.

This is most commonly seen in studies of human-to-human variation in cardiac MRI assessment. Cardiac MRI is very expensive, so researchers have not done as many test-retest comparisons or repeat measurements on MRI, but when it has been done, clinician assessment of MRI is no more precise than clinician assessment of echocardiography, and is actually less precise than AI assessment of echocardiography. Even simple measurements such as wall thickness and LVEF on MRI have R² as low as 0.32 to 0.58. When using such a noisy instrument, how can you decide which measurement is your “ground truth”?
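To make the link between reader noise and a low test-retest R² concrete, here is a minimal simulation sketch. All numbers are assumptions chosen for illustration (a population LVEF spread of 8 points, a reader error of 6 points, independent zero-mean Gaussian errors); none of them come from the studies referenced above.

```python
import random


def pearson_r(xs, ys):
    """Pearson correlation coefficient, written out to avoid extra dependencies."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def simulate_test_retest_r2(n_patients=5000, true_sd=8.0, reader_sd=6.0, seed=0):
    """R^2 between two independent reads of the same (hypothetical) patients."""
    rng = random.Random(seed)
    # Hypothetical "true" LVEF values; the mean of 55 is an assumption.
    true_lvef = [rng.gauss(55.0, true_sd) for _ in range(n_patients)]
    # Each read adds its own independent error to the same underlying value.
    read_a = [t + rng.gauss(0.0, reader_sd) for t in true_lvef]
    read_b = [t + rng.gauss(0.0, reader_sd) for t in true_lvef]
    return pearson_r(read_a, read_b) ** 2


r2 = simulate_test_retest_r2()
print(f"test-retest R^2 with assumed reader noise: {r2:.2f}")
```

Under these assumptions, a reader error that is smaller than the spread between patients is already enough to pull the read-to-read R² well below 1, even though every read is looking at the same heart.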

MRI software is less rigorously tested

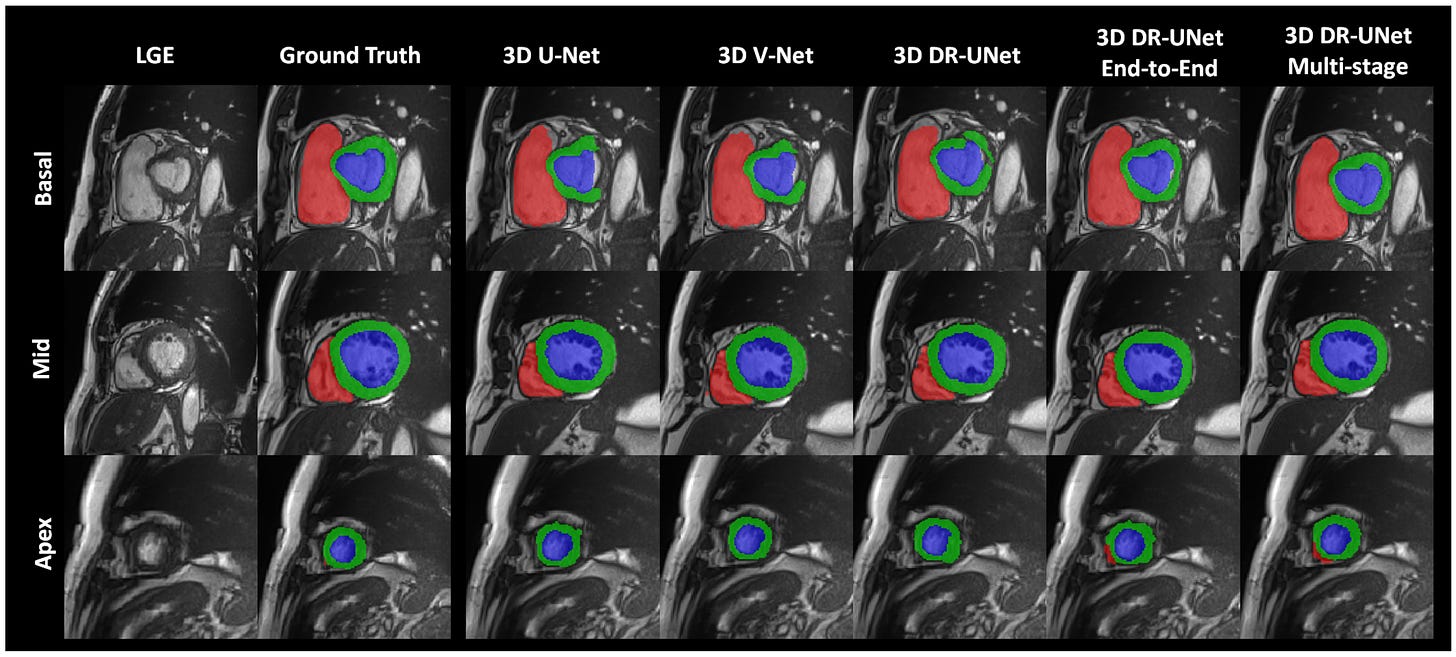

The other challenge with cardiac MRI, which at first glance one might think is a plus, is the sheer number of images obtained. To calculate LVEF, the short-axis stack often has a series of (~10) images that must be individually annotated and measured at both systole and diastole. Because it is so labor intensive, the culture in cardiac MRI is such that almost no one actually does it manually. This isn’t meant to besmirch Circle, Siemens, or any of the great software developers, but cardiac MRI interpretation has already been usurped by automation, and closed-source, black-box, proprietary automation at that. And there is TREMENDOUS VARIATION BETWEEN THE DIFFERENT PRODUCTS (sometimes even between different versions of the same package). None of the commonly used software packages have undergone clinical trials, shared code, or had nearly the scrutiny that AI for echocardiography has undergone [2].
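For readers who have not seen the calculation, the short-axis approach can be sketched as a summation of discs: each annotated slice contributes its blood-pool area times the slice spacing, and LVEF is the fractional change in volume between diastole and systole. The sketch below is illustrative only. The function names, the contour areas, and the 1.0 cm spacing are hypothetical, and it does not represent how any commercial package works.

```python
def stack_volume_ml(areas_cm2, slice_spacing_cm):
    """Summation of discs: area times spacing per slice (1 cm^3 equals 1 mL)."""
    return sum(areas_cm2) * slice_spacing_cm


def lvef_percent(ed_areas_cm2, es_areas_cm2, slice_spacing_cm):
    """LVEF = (EDV - ESV) / EDV, expressed as a percentage."""
    edv = stack_volume_ml(ed_areas_cm2, slice_spacing_cm)
    esv = stack_volume_ml(es_areas_cm2, slice_spacing_cm)
    if edv <= 0:
        raise ValueError("end-diastolic volume must be positive")
    return 100.0 * (edv - esv) / edv


# Hypothetical blood-pool areas (cm^2) for a 10-slice stack, base to apex.
ed_areas = [18, 20, 21, 20, 18, 16, 13, 10, 6, 3]
es_areas = [9, 10, 10, 9, 8, 6, 4, 2, 1, 0]
spacing_cm = 1.0  # assumed slice thickness plus gap

lvef_all_slices = lvef_percent(ed_areas, es_areas, spacing_cm)

# Same stack, but the basal slice is excluded at end systole only,
# a single contouring decision made here purely for illustration.
lvef_basal_excluded = lvef_percent(ed_areas, es_areas[1:], spacing_cm)

print(f"LVEF, all slices:          {lvef_all_slices:.1f}%")
print(f"LVEF, basal slice dropped: {lvef_basal_excluded:.1f}%")
```

In this toy stack, one decision about one slice moves the LVEF by several points, which is the kind of choice that gets buried inside automated, closed-source pipelines.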

MRI is less evidence driven than echo

When we think of diagnosis, we often have to think about the downstream treatment and how clinical management is affected. MRI is valuable for the opportunity to visualize “scar”, which has a strong correlation with important clinical outcomes. LVEF is an important measurement because it defines heart failure and guides the choice of important pharmacologic and device therapies. LVEF can be assessed in many ways (SPECT, MUGA, CT, MRI, or echocardiography), and clinicians often use the measurements interchangeably to decide downstream treatment. However, whether you’re using ARBs (Val-HeFT), MRAs (RALES), ARNIs (PARADIGM-HF), SGLT2i (DELIVER), or beta blockers (COMET), essentially all (99.99%) practice-changing clinical trials rely on the echocardiographic measurement of cardiac function.

In fact, if we had to compare echo vs. MRI measurements for their clinical utility (greatest connection to actionable therapeutics), it’s rather that MRI should be benchmarked against echo than the other way around. Echo is the more commonly used, evidence-based, and widely accepted modality, so an echo LVEF of 35% comes with stronger evidence for treatments that can improve a patient’s condition. Given the preponderance of data, it might be fair to say echo should be the Gold Standard and the Reference Measurement. With higher temporal resolution, and being less prone to the artifacts that come with limited temporal resolution, echocardiographic measurements are more robust to dynamic clinical scenarios [3].

The room where it happens

Speaking as someone who knows how the sausage is made (having seen how both MRI and echo are read at multiple institutions), it’s almost always the case that the MRI reader has read the echo report before making their assessment. The luxury of being the second reader cannot be overstated, nor can the anchoring bias of knowing a previous measurement. To my knowledge, there is no evidence that MRI measurements have a stronger correlation with pathology or anatomic measurements than echo, CT, or other forms of cardiac imaging.

To the degree that echocardiographic interpretation is not given feedback from cardiac MRI measurements in routine clinical practice, it is a weak assumption that a better AI-echo algorithm will correlate better with MRI measurements than standard echo measurements do. Making the cardiac MRI assessment the primary outcome of any AI clinical trial (for echo) would simply be flipping a coin and leaving things up to chance. Choosing a bronze standard means that AI can be even better than the reference standard, and a big part of AI research is recognizing where there is clinical variation and how best to choose a training label. Given the strong relationship between performance and training dataset size, as someone training AI models, I strongly prefer 1M echo videos over 1k MRI images as the training data, and I think this will be the dominant strategy even with the same downstream benchmarks.
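The “bronze standard” point can be put into numbers with the classical attenuation relationship from measurement theory: if both the model and the reference measure the same underlying quantity with independent, zero-mean errors, the expected R² between them is the product of their reliabilities. The sketch below uses that textbook relationship with assumed, purely illustrative noise levels. It is a simplification, and the anchoring described earlier would violate the independence assumption.

```python
def reliability(true_sd, noise_sd):
    """Share of observed variance that reflects true between-patient variation."""
    return true_sd**2 / (true_sd**2 + noise_sd**2)


def expected_r2_vs_reference(true_sd, model_noise_sd, reference_noise_sd):
    """Expected R^2 between a model and a noisy reference (independent errors)."""
    return reliability(true_sd, model_noise_sd) * reliability(true_sd, reference_noise_sd)


TRUE_SD = 8.0       # assumed spread of true LVEF across patients
REFERENCE_SD = 6.0  # assumed error of the reference measurement

# A hypothetical model with zero error still cannot score above the
# reliability of the reference it is being graded against.
ceiling = expected_r2_vs_reference(TRUE_SD, 0.0, REFERENCE_SD)

# A hypothetical model that is exactly as noisy as the reference.
same_noise = expected_r2_vs_reference(TRUE_SD, REFERENCE_SD, REFERENCE_SD)

print(f"R^2 ceiling for a perfect model:    {ceiling:.2f}")
print(f"R^2 for a model as noisy as the ref: {same_noise:.2f}")
```

Under these assumptions, a model that measured the heart perfectly would still look mediocre against the reference, so the benchmark cannot distinguish “better than the reference” from “about as good as the reference”.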

[1] I first learned about MRI in an undergrad biochemistry class, where we had to derive findings from first principles, and the sheer elegance of the technology is unparalleled. A triumph of technology and science, MRI won the 2003 Nobel Prize in Physiology or Medicine.

[2] Part of why I’m a huge proponent of clinical trials and code sharing is I don’t want echo software to go the way of MRI software.

[3] We’ve shown that even small differences in which frame to annotate (30 ms) in echo videos can lead to large variation in downstream measurements.

Thank you for sharing the perspective. It was a pleasure to read.

Do you believe that, given ample training data and labels, cardiac MRI can offer supplementary insights into cardiac function beyond just scar detection?